Tiffany Chen

Marketing


This analysis examines whether llms.txt is valuable or just AI hype, presenting evidence from companies like Vercel (10% of signups from ChatGPT), Google (including llms.txt in their A2A protocol), and Anthropic (specifically requesting llms.txt implementation). The article demonstrates that llms.txt provides real benefits for AI retrieval, with data showing LLMs actively crawling these files and companies like Windsurf highlighting token and time savings.

If you've been following AI trends, you've probably heard of llms.txt.

This new standard for making web content more accessible to large language models (LLMs) has gained significant traction in 2025.

But with any emerging AI technology, a fair question arises: is llms.txt actually valuable, or just AI hype?

Understanding the skepticism

Some critics question whether llms.txt actually improves AI retrieval, accuracy, or traffic. They point out that not every LLM provider has committed to parsing llms.txt, and argue that following general SEO principles, such as publishing a sitemap, is sufficient.

Let's address these points with real-world evidence, collected through our firsthand experience with Generative Engine Optimization (GEO) data and our work with leading AI customers like Anthropic, Cursor, and Windsurf.

1. SEO is very different from LLM optimization

The first misconception is that traditional SEO practices are sufficient for AI-driven discovery. The data tells a different story:

- Vercel reports that 10% of their signups now come from ChatGPT as a result of deliberate GEO efforts (not SEO)

- Google, which shaped the modern era of SEO, included an llms.txt file in its new Agent2Agent (A2A) protocol

LLMs have fundamentally different needs than traditional search engines. They benefit from clarity, context, and structure in ways that conventional search doesn't.

At Mintlify, we've observed that documentation is now 50% for humans and 50% for LLMs, each requiring different optimization approaches.

2. Simplified text files improve AI retrieval

The second key insight is that given how LLMs work, having structured, simplified text files is fundamentally beneficial:

- Clearer structure means fewer tokens, faster responses, and lower costs

- The simpler format reduces the computational effort required for LLMs to extract meaning from your content

- Companies like Windsurf have highlighted that llms.txt saves time and tokens when agents don't need to parse through complex HTML
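To make the token argument concrete, here is a rough sketch (not Windsurf's or Mintlify's tooling) that strips a small, hypothetical HTML docs page down to its visible text using Python's standard-library `html.parser`. Character counts stand in for tokens here; actual token counts depend on each model's tokenizer. `TextExtractor`, `extract_text`, and `SAMPLE_HTML` are illustrative names.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped element.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def extract_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


# Hypothetical docs page: styling, scripts, and navigation wrap one short paragraph.
SAMPLE_HTML = """
<html><head><style>.nav{display:flex}</style>
<script>window.analytics = {track: function(){}};</script></head>
<body><nav class="nav"><a href="/">Home</a><a href="/pricing">Pricing</a></nav>
<main><h1>Quickstart</h1><p>Install the CLI and run the dev server.</p></main>
</body></html>
"""

if __name__ == "__main__":
    text = extract_text(SAMPLE_HTML)
    print(text)
    print(f"HTML chars: {len(SAMPLE_HTML)}, text chars: {len(text)}")
```

On real pages, markup, inline scripts, and navigation usually account for much of the payload, which is the overhead a Markdown file avoids. This naive extractor still keeps navigation text such as "Home" and "Pricing"; a curated Markdown file would leave that out too.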

3. Major LLM providers are committed to llms.txt

The most critical evidence for llms.txt's value comes from the industry leaders themselves.

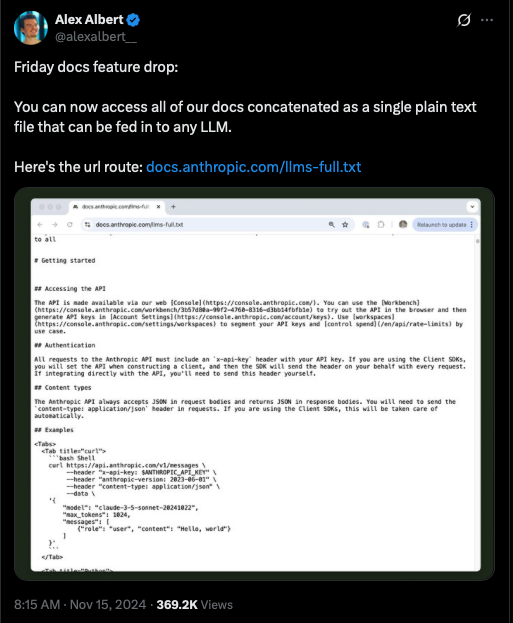

Anthropic, creator of Claude, specifically asked Mintlify to implement llms.txt and llms-full.txt for their documentation. This request demonstrates a clear commitment to these standards from one of the leading AI companies.

This isn't just anecdotal evidence. Profound, a company specializing in tracking Generative Engine Optimization (GEO) metrics, has collected data showing that models from Microsoft, OpenAI, and others are actively crawling and indexing both llms.txt and llms-full.txt files.

When the companies building the leading LLMs actively implement these standards, it signals that the standards matter in practice, not just in theory.

The data from Profound confirms this isn't just about following a trend—it's about measurable improvements in how AI systems interact with your content.

The rise of llms-full.txt

Interestingly, our data from Profound also reveals something unexpected: LLMs are accessing llms-full.txt even more frequently than the original llms.txt.
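Profound's collection methodology isn't described here, but you can run a rough version of the same check against your own server logs. The sketch below assumes logs in the Combined Log Format and counts requests for `/llms.txt` and `/llms-full.txt` whose User-Agent contains one of a few AI crawler names. The crawler list is illustrative and incomplete, `count_llms_requests` and the sample log lines are hypothetical, and User-Agent strings can be spoofed, so treat the counts as indicative only.

```python
import re
from collections import Counter

# Combined Log Format: host ident user [time] "METHOD path PROTO" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

# Substrings identifying some AI crawlers in the User-Agent header (illustrative, not exhaustive).
AI_CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot", "bingbot")
TARGETS = ("/llms.txt", "/llms-full.txt")


def count_llms_requests(lines):
    """Return a Counter keyed by (path, crawler) for AI-crawler hits on the llms files."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        path = match.group("path").split("?", 1)[0]  # ignore query strings
        if path not in TARGETS:
            continue
        ua = match.group("ua")
        for crawler in AI_CRAWLERS:
            if crawler in ua:
                counts[(path, crawler)] += 1
                break
    return counts


# Hypothetical log lines with simplified User-Agent strings.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/May/2025:12:00:00 +0000] "GET /llms-full.txt HTTP/1.1" 200 51234 "-" "Mozilla/5.0 (compatible; GPTBot/1.2)"',
    '203.0.113.6 - - [10/May/2025:12:01:00 +0000] "GET /llms.txt HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '203.0.113.7 - - [10/May/2025:12:02:00 +0000] "GET /docs/quickstart HTTP/1.1" 200 9000 "-" "Mozilla/5.0 (compatible; GPTBot/1.2)"',
    '198.51.100.9 - - [10/May/2025:12:03:00 +0000] "GET /llms-full.txt HTTP/1.1" 200 51234 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

if __name__ == "__main__":
    for (path, crawler), n in sorted(count_llms_requests(SAMPLE_LOG).items()):
        print(f"{path:16} {crawler:14} {n}")
```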

While llms.txt lists which pages to crawl, llms-full.txt is a single Markdown file containing the full plain text content of your site—designed for simpler, faster ingestion.
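A minimal sketch of the two files, assuming your pages are already available as Markdown: `build_llms_txt` follows the layout described at llmstxt.org (an H1 title, a blockquote summary, then H2 sections of `- [title](url): notes` links), and `build_llms_full_txt` concatenates every page into one file. The `Page` type, the function names, the example.com URLs, and the per-page layout inside llms-full.txt (an H2 per page plus a `Source:` line) are assumptions for illustration, not Mintlify's implementation.

```python
from dataclasses import dataclass


@dataclass
class Page:
    title: str
    url: str
    summary: str
    markdown: str  # full page body, already converted to Markdown


def build_llms_txt(site: str, description: str, sections: dict[str, list[Page]]) -> str:
    """Index file: H1 title, blockquote summary, then H2 sections of link lists."""
    lines = [f"# {site}", "", f"> {description}", ""]
    for heading, pages in sections.items():
        lines += [f"## {heading}", ""]
        for page in pages:
            lines.append(f"- [{page.title}]({page.url}): {page.summary}")
        lines.append("")
    return "\n".join(lines)


def build_llms_full_txt(site: str, sections: dict[str, list[Page]]) -> str:
    """Single file holding the full Markdown of every page, in index order."""
    parts = [f"# {site}"]
    for pages in sections.values():
        for page in pages:
            # Keep a heading and source link per page so a model can tell pages apart and cite them.
            parts.append(f"## {page.title}\n\nSource: {page.url}\n\n{page.markdown.strip()}")
    return "\n\n".join(parts) + "\n"


# Hypothetical site content.
SECTIONS = {
    "Docs": [
        Page("Quickstart", "https://docs.example.com/quickstart.md",
             "Install the CLI and run the dev server",
             "Install the CLI, then run the dev server locally."),
        Page("Authentication", "https://docs.example.com/auth.md",
             "API keys and OAuth setup",
             "Create an API key in the dashboard and send it as a bearer token."),
    ],
}

if __name__ == "__main__":
    print(build_llms_txt("Example Docs", "Documentation for the hypothetical Example API.", SECTIONS))
    print(build_llms_full_txt("Example Docs", SECTIONS))
```

The trade-off mirrors the paragraph above: llms.txt stays small and lets an agent fetch only the pages it needs, while llms-full.txt costs more tokens up front but needs a single request.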

Mintlify originally developed llms-full.txt in collaboration with Anthropic, who needed a cleaner way to feed their entire documentation into LLMs without parsing HTML. After seeing its impact, we rolled it out for all customers, and it was officially adopted into the llmstxt.org standard.

In short: it works, and it wasn't built in a vacuum.

Future-proofing your content

Today, being accessible to LLMs gives you a competitive advantage. Soon, it will become table stakes.

These standards represent a fundamental shift in how we think about content accessibility. Your audience now includes LLMs alongside humans, and optimizing for AI isn't about gaming a system—it's about ensuring your content is accurately represented.

The emerging best practices center on simplicity. Whether you're using Mintlify or building in-house, simplifying structure pays off.

That usually means simplifying content into Markdown, as llms.txt and llms-full.txt do, but it can also mean serving individual pages as Markdown so LLMs can ingest them more easily.
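The llmstxt.org proposal suggests serving a clean Markdown version of each page at the same URL with `.md` appended, and appending `index.html.md` to URLs without a file name. A small helper for that mapping might look like the sketch below; treating a trailing slash as "no file name" and dropping query strings and fragments are interpretation choices made here, and `markdown_url` is a hypothetical name.

```python
from urllib.parse import urlsplit, urlunsplit


def markdown_url(page_url: str) -> str:
    """Map a page URL to its Markdown twin: append ".md", or "index.html.md"
    when the path ends in "/" (treated here as a URL without a file name)."""
    parts = urlsplit(page_url)
    path = parts.path or "/"
    if path.endswith("/"):
        path += "index.html.md"
    else:
        path += ".md"
    # Drop query and fragment: they address views of the HTML page, not a separate file.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


if __name__ == "__main__":
    for url in ("https://docs.example.com/quickstart", "https://docs.example.com/guides/"):
        print(url, "->", markdown_url(url))
```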

Consider not just how AI indexes your content, but how users will interact through AI interfaces. As people shift to AI-first workflows, it's more important than ever to make your website or documentation easy to reference in any LLM.

What's next?

Standards for AI optimization will continue to evolve as more companies recognize their value. Looking ahead, efforts like Model Context Protocol (MCP) are exploring how LLMs can move from reading content to directly interacting with products.

If you're interested in learning how AI leaders like Perplexity, Cursor, and Bolt.new are thinking about documentation and preparing for the future, we're happy to chat about making your content AI-ready.
