Han Wang

Co-Founder


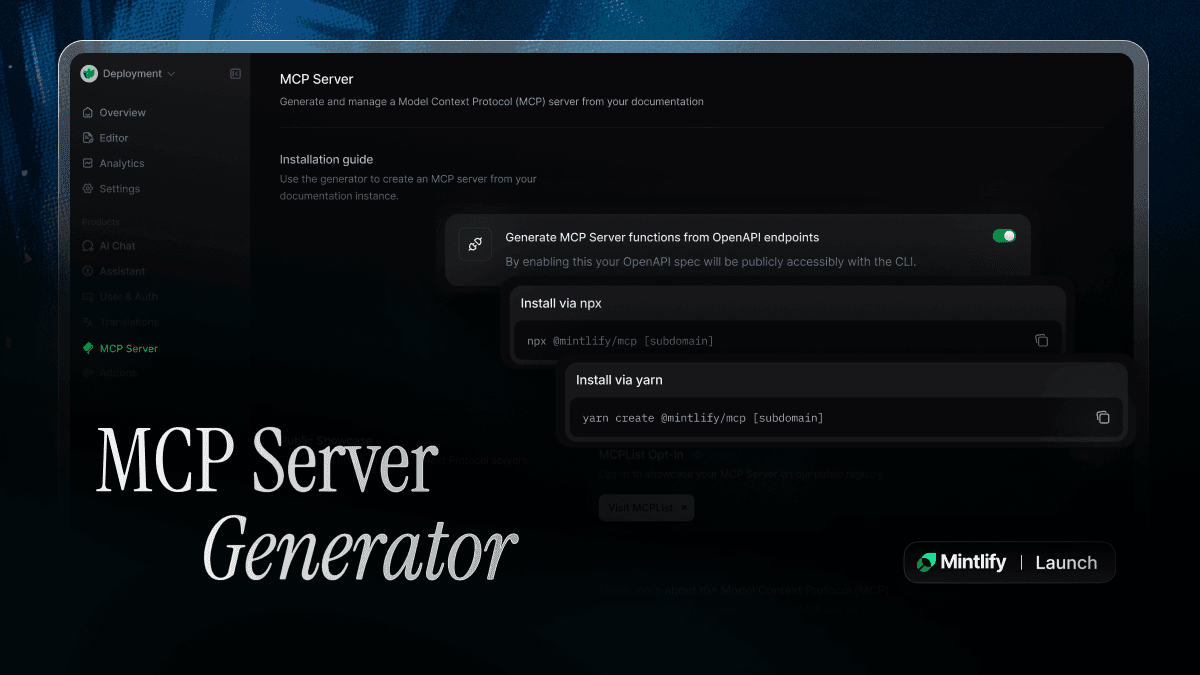

Mintlify now automatically generates Model Context Protocol (MCP) servers from your documentation, enabling AI applications to query your docs in real time and execute API calls on behalf of users. This bridges the gap between AI tools and your product, supporting both contextual answers and live API interactions, while positioning your documentation in AI registries for broader discoverability.

Everything that you need to build a Model Context Protocol (MCP) server is already available in your docs.

As MCP adoption grows by the day, we wanted to make it easy to build that bridge between AI and your APIs.

That's why we've launched the ability to generate MCP servers directly from your documentation.

What is an MCP Server?

Model Context Protocol is a standard released by Anthropic in November 2024 to simplify how applications can provide context to large language models (LLMs).

It lets LLMs use external tools and services: MCP servers expose your data to AI, and MCP clients connect to those servers to access that data. For example, you can use an MCP client like Windsurf or Cursor to search for a pull request through GitHub's MCP server.
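Under the hood, an MCP client and server exchange JSON-RPC 2.0 messages. The sketch below shows the two requests a client typically sends to use a tool: `tools/list` to discover what the server offers, then `tools/call` to invoke one. The tool name `search_pull_requests` and its arguments are hypothetical placeholders, and the `initialize` handshake that precedes these requests is omitted.

```python
import json
from typing import Any, Dict, Optional


def make_request(request_id: int, method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object, the message format MCP uses."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes (sent after the initialize
# handshake, which this sketch omits).
list_tools = make_request(1, "tools/list")

# Invoke one of the advertised tools. "search_pull_requests" and its
# arguments are hypothetical, not a real server's tool.
call_tool = make_request(
    2,
    "tools/call",
    {"name": "search_pull_requests", "arguments": {"query": "fix login redirect"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

The server answers each request with a JSON-RPC response carrying the same `id`, which is how the client matches replies to requests.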

While MCP servers have recently gained intense traction as a way to embed products into AI ecosystems, best practices for building them are still evolving.

We believe the most effective approach is to generate MCP servers dynamically from existing data structures, like your OpenAPI spec. With a simple CLI installation, Mintlify does exactly that, automatically setting up an MCP server that lets AI applications interact with your docs in two key scenarios:

- General search and answers, when users want a contextual answer

- Real-time API querying, when users want AI to execute actions on their behalf
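To make "generate from your OpenAPI spec" concrete, here is a minimal sketch of one possible mapping from OpenAPI operations to MCP tool definitions (`name`, `description`, `inputSchema`). It is an illustration, not Mintlify's implementation: the rules used here (operationId as the tool name, summary as the description, parameters and a JSON request body folded into one input schema) are assumptions, and the two-operation spec is hypothetical.

```python
import re
from typing import Any, Dict, List

HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def openapi_to_tools(spec: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Derive MCP tool definitions from OpenAPI operations (assumed mapping)."""
    tools = []
    for path, path_item in spec.get("paths", {}).items():
        for method, op in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as shared "parameters"
            properties: Dict[str, Any] = {}
            required: List[str] = []
            # Operation-level parameters (path, query, header) become top-level arguments.
            for param in op.get("parameters", []):
                properties[param["name"]] = param.get("schema", {"type": "string"})
                if param.get("required"):
                    required.append(param["name"])
            # A JSON request body, if present, becomes a single "body" argument.
            request_body = op.get("requestBody", {})
            json_body = request_body.get("content", {}).get("application/json")
            if json_body is not None:
                properties["body"] = json_body.get("schema", {"type": "object"})
                if request_body.get("required"):
                    required.append("body")
            # Fall back to a sanitized "method_path" name when operationId is missing.
            fallback = re.sub(r"[^A-Za-z0-9_-]", "_", f"{method}_{path}")
            tools.append({
                "name": op.get("operationId", fallback),
                "description": op.get("summary", f"{method.upper()} {path}"),
                "inputSchema": {"type": "object", "properties": properties, "required": required},
            })
    return tools


# Hypothetical spec used for illustration only.
spec = {
    "paths": {
        "/refunds": {
            "post": {
                "operationId": "createRefund",
                "summary": "Create a refund",
                "requestBody": {
                    "required": True,
                    "content": {"application/json": {"schema": {
                        "type": "object", "properties": {"charge": {"type": "string"}}}}},
                },
            }
        },
        "/users/{id}": {
            "get": {
                "summary": "Retrieve a user",
                "parameters": [{"name": "id", "in": "path", "required": True,
                                "schema": {"type": "string"}}],
            }
        },
    }
}

for tool in openapi_to_tools(spec):
    print(tool["name"], "-", tool["description"])
```

Because the spec already describes every endpoint's inputs, the generator mostly reshapes existing data rather than asking anyone to write tool definitions by hand.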

Use Case #1: Making docs AI-accessible for faster answers

Using AI to get fast, contextual answers is quickly replacing traditional search as the default.

Your product documentation needs to fit into contextual answer workflows in two ways:

- Real-time AI search – When someone asks an LLM a question about your product, such as “How do I authenticate with Foo's API?”, the AI application needs a structured way to retrieve relevant information.

- Static context loads – When users know which docs hold the answer, they need an easy way to load those pages into the AI app's context window.
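For the real-time case, that structured retrieval path is typically a search tool exposed by a docs MCP server. The sketch below assumes a hypothetical `search_docs` tool backed by naive keyword overlap over an in-memory set of pages; a production server would use a proper search index. It returns results in the MCP tool-result shape: a `content` list of text items plus an `isError` flag.

```python
import re
from typing import Any, Dict, List, Set, Tuple

# Hypothetical docs pages; a real server would index your published content.
DOCS: Dict[str, str] = {
    "/authentication": "Authenticate with the Foo API by sending an API key in the Authorization header.",
    "/rate-limits": "The Foo API allows 100 requests per minute per API key.",
    "/webhooks": "Webhooks notify your server when events happen in your Foo account.",
}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search_docs(query: str, limit: int = 2) -> Dict[str, Any]:
    """Handle a hypothetical 'search_docs' tool call and return an MCP tool result."""
    terms = tokenize(query)
    scored: List[Tuple[int, str, str]] = []
    for path, text in DOCS.items():
        score = len(terms & tokenize(text))  # number of shared terms
        if score > 0:
            scored.append((score, path, text))
    # Highest score first; ties broken alphabetically by path for stable output.
    scored.sort(key=lambda item: (-item[0], item[1]))
    content = [{"type": "text", "text": f"{path}: {text}"} for _, path, text in scored[:limit]]
    if not content:
        content = [{"type": "text", "text": "No matching pages found."}]
    return {"content": content, "isError": False}


for item in search_docs("How do I authenticate with Foo's API?")["content"]:
    print(item["text"])
```

Keyword overlap is chosen only to keep the example dependency-free; the tool-result shape is the part an MCP client actually relies on.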

To make AI search easier for users, you can now leverage:

- Structured formats – Markdown versions of documentation let people load context into AI apps more easily.

- Precompiled full-context files – An /llms-full.txt file provides AI apps with a single reference to your entire documentation for a one-time static load.

- AI-friendly indexing – An /llms.txt file acts as a sitemap for AI, helping general-purpose LLMs index your content more efficiently and surface it when people are searching.

- MCP servers for docs – LLMs can now query your documentation and return up-to-date answers without users having to manually load it into context windows.

Together, these elements ensure that documentation isn't just human-readable but also optimized for AI to retrieve and parse for end users.
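As a rough illustration of the two text files, the sketch below builds an `/llms.txt` index following the llmstxt.org layout (an H1 title, a blockquote summary, then H2 sections of Markdown links) and an `/llms-full.txt` by concatenating each page's Markdown. The page list, URLs, and the "Docs" section name are hypothetical, and concatenating pages in list order is an assumption.

```python
from typing import Dict, List

# Hypothetical pages; in practice these would come from your docs build.
PAGES: List[Dict[str, str]] = [
    {
        "title": "Authentication",
        "url": "https://docs.example.com/authentication.md",
        "description": "API keys and bearer tokens",
        "markdown": "# Authentication\n\nSend your API key in the Authorization header.\n",
    },
    {
        "title": "Rate limits",
        "url": "https://docs.example.com/rate-limits.md",
        "description": "Request quotas per API key",
        "markdown": "# Rate limits\n\nEach API key may send 100 requests per minute.\n",
    },
]


def build_llms_txt(project: str, summary: str, pages: List[Dict[str, str]]) -> str:
    """Index file: title, one-line summary, then a list of links to each page."""
    lines = [f"# {project}", "", f"> {summary}", "", "## Docs", ""]
    for page in pages:
        lines.append(f"- [{page['title']}]({page['url']}): {page['description']}")
    return "\n".join(lines) + "\n"


def build_llms_full_txt(pages: List[Dict[str, str]]) -> str:
    """Single-file context load: every page's Markdown, separated by blank lines."""
    return "\n\n".join(page["markdown"].strip() for page in pages) + "\n"


print(build_llms_txt("Foo", "Reference docs for the Foo API.", PAGES))
print(build_llms_full_txt(PAGES))
```

The index stays small enough to crawl cheaply, while the full file trades size for a one-shot load of everything.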

Use Case #2: Real-time querying via OpenAPI

Beyond returning answers, an exciting and increasingly popular use case is letting AI apps interact with APIs directly.

Instead of just answering “How do I authenticate with Foo's API?”, an AI app equipped as an MCP client could take it a step further—actually generating an authenticated request or executing an action on the user's behalf.

By exposing your OpenAPI spec via an MCP server, you allow AI apps to:

- Provide real-time API guidance – Users can ask AI how to perform an action, and it can pull the exact API reference from your documentation.

- Enable AI-assisted API calls – Instead of just showing an endpoint, AI apps can generate a ready-to-use API request.

- Integrate API execution into workflows – With proper authentication, an AI app could even execute actions, such as initiating a Stripe refund or retrieving live data from your service.
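The "ready-to-use API request" step can be sketched as turning a `tools/call` payload back into an HTTP request. This assumes the generator kept each operation's method, path template, and parameter locations next to its tool definition, and that the API accepts a bearer token; the operation, base URL, and key below are all hypothetical. The request is built but deliberately not sent.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict

# Hypothetical operation metadata stored alongside a generated tool.
OPERATION: Dict[str, Any] = {
    "method": "POST",
    "path": "/payments/{payment_id}/refunds",
    "path_params": ["payment_id"],
    "query_params": ["expand"],
}


def build_request(base_url: str, operation: Dict[str, Any],
                  arguments: Dict[str, Any], api_key: str) -> urllib.request.Request:
    args = dict(arguments)  # copy so the caller's dict is not mutated
    # Substitute path parameters, percent-encoding each value.
    path = operation["path"]
    for name in operation.get("path_params", []):
        path = path.replace("{" + name + "}", urllib.parse.quote(str(args.pop(name)), safe=""))
    # Move any declared query parameters into the query string.
    query = {name: args.pop(name) for name in operation.get("query_params", []) if name in args}
    url = base_url.rstrip("/") + path
    if query:
        url += "?" + urllib.parse.urlencode(query)
    # Send the "body" argument, if present, as JSON; other leftovers are ignored here.
    body = args.pop("body", None)
    data = json.dumps(body).encode("utf-8") if body is not None else None
    headers = {"Authorization": f"Bearer {api_key}"}
    if data is not None:
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(url, data=data, headers=headers, method=operation["method"])


req = build_request(
    "https://api.example.com/v1",
    OPERATION,
    {"payment_id": "pay_123", "expand": "balance", "body": {"amount": 500}},
    api_key="sk_test_hypothetical",
)
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send it. Keeping "build" and "send" separate leaves room for the user to review or approve the request before anything executes.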

MCP servers for documentation mark a major upgrade to the user experience, from AI simply returning answers to AI actually executing requests on users' behalf.

Why this matters

MCP is only three months old, and we're just beginning to see its potential.

More than just a technical shift, this is a distribution opportunity. Getting your MCP server listed in registries like the OpenTools MCP Server Registry, mcp.run, Cursor.directory, and Windsurf.run makes it easier for users to find and integrate with your product.

Optimizing documentation for both human users and AI isn't just a nice-to-have—it's becoming table stakes. We're quickly moving toward a future where AI agents handle tasks on behalf of users, and an MCP server is a critical piece of that future.

If you're interested in learning more about MCP & documentation, get in touch with our team.

More blog posts to read

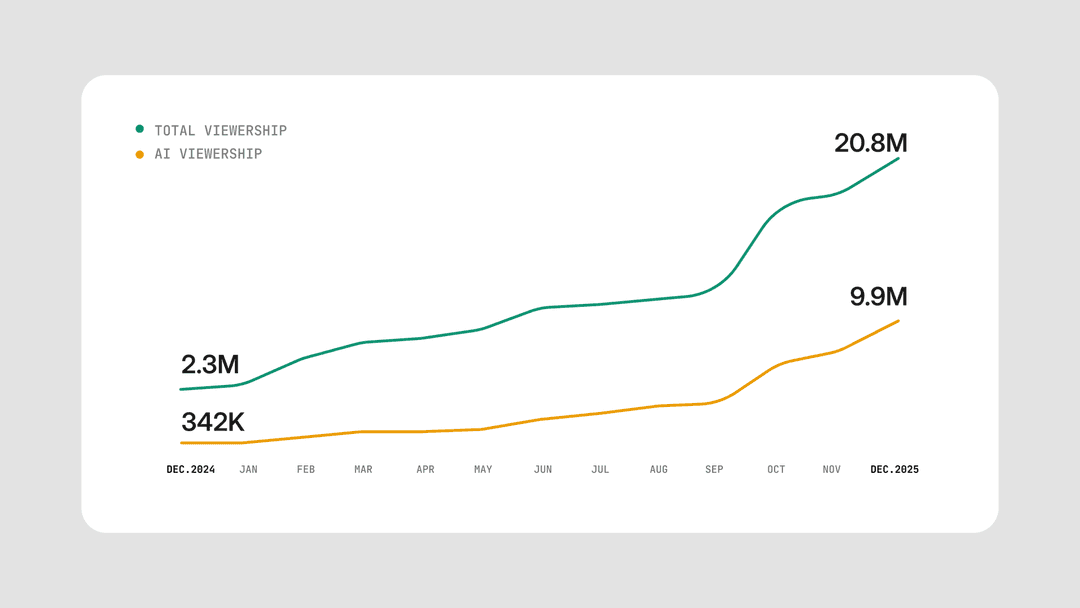

Almost half your docs traffic is AI, time to understand the agent experience

Nearly half of all the traffic to your documentation site is now AI agents. How can you create a great agent experience?

February 17, 2026Peri Langlois

Head of Product Marketing

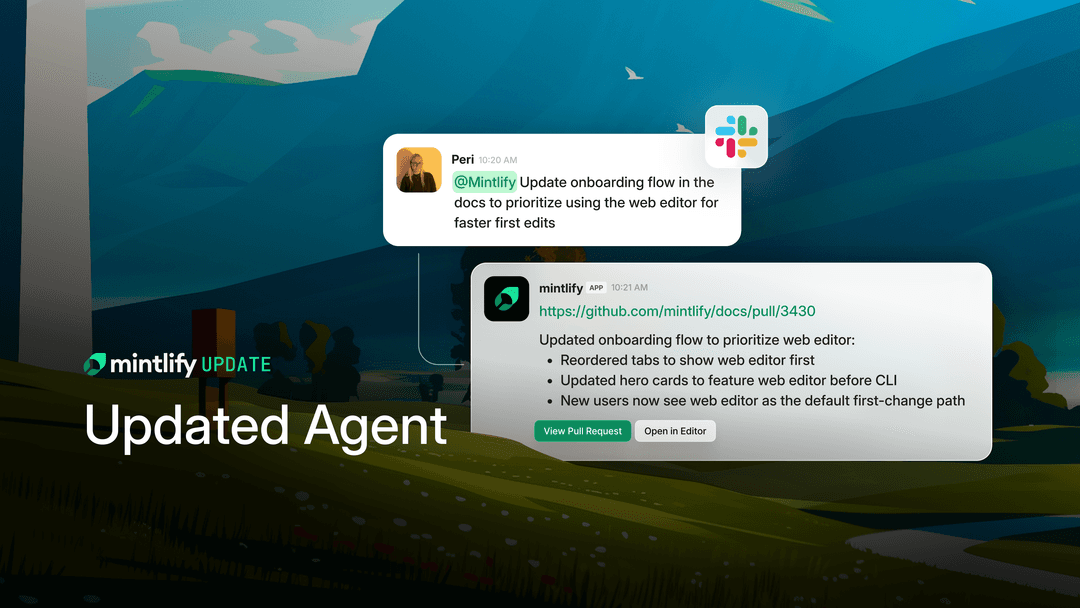

@mintlify for better docs, faster

The improved agent means better contributions from my team and fewer docs tasks stuck in the backlog.

February 16, 2026Ethan Palm

Technical Writing

Han Wang

Co-Founder