Nick Khami

Engineering

This debugging story reveals how Cloudflare's automatic compression settings broke HTTP streaming for browser and node-fetch requests while cURL worked fine, causing 10+ second latency issues. The solution involved disabling compression in Cloudflare's dashboard, highlighting the importance of infrastructure visibility and the hidden complexities of "helpful" automation in production systems.

The Problem

We recently encountered a frustrating issue with HTTP response streaming at Mintlify. Our system uses the AI SDK with the Node stream API to forward streams, and suddenly things stopped working properly. The symptoms were confusing: streaming worked perfectly with cURL and Postman, but failed completely with node-fetch and browser fetch.

Initial Investigation

Our first hypothesis centered on stream compatibility. We suspected the problem might be related to how the AI SDK responds using web streams versus Node streams, particularly around HTTP/2 compliance. Our understanding at the time was that web streams had known issues with HTTP/2, while Node streams were compliant.

The Cloudflare Worker Bandaid

While investigating, we discovered an odd workaround. We set up a Cloudflare worker as middleware between our server and clients:

// Cloudflare Worker that acts as a CORS-enabled streaming proxy
export default {
  async fetch(request, env, ctx) {
    // Handle preflight CORS requests
    // ....

    // Extract request body for non-GET requests
    const body = ['GET', 'HEAD'].includes(request.method) ? null : request.body;

    // Create headers that mimic a real browser request
    // ....

    // Forward the request to the target URL
    const response = await fetch(request.url, {
      method: request.method,
      headers: proxyHeaders,
      body: body,
    });

    // Copy response headers and enable CORS
    const responseHeaders = new Headers(response.headers);
    responseHeaders.set('Access-Control-Allow-Origin', '*');

    // Create streaming response to handle large payloads
    const { readable, writable } = new TransformStream();
    response.body?.pipeTo(writable);

    return new Response(readable, {
      status: response.status,
      statusText: response.statusText,
      headers: responseHeaders,
    });
  },
};

This worker simply received the stream and responded with it. Somehow, this completely fixed the issue, which made no sense to us at the time.

Ruling Out HTTP Versions

We quickly invalidated our HTTP/1 vs HTTP/2 suspicion by using cURL with the --http1.1 flag. Even when making HTTP/1 requests, things streamed properly. Throughout the entire debugging process, cURL consistently worked as expected.

Header Stripping Suspicions

One thing that stuck out was that our egress systems (ALB and Cloudflare) were stripping these headers:

'Transfer-Encoding': 'chunked',
'Connection': 'keep-alive',
'Content-Encoding': 'none',

Since browsers frequently have issues with these headers, and given that Postman and cURL worked while node-fetch and browser fetch didn't, we thought this might be the culprit.
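One way to check which headers an intermediary drops is to diff what the origin sets against what the client actually receives. The snippet below is a minimal sketch, assuming both sets can be captured as plain objects. `findStrippedHeaders` is a hypothetical helper, and the client-side headers in the example are made up for illustration.

```javascript
// Hypothetical helper: list headers the origin set that never reached the client.
// Header names are compared case-insensitively, since HTTP header names are
// case-insensitive.
function findStrippedHeaders(sentByOrigin, receivedByClient) {
  const received = new Set(
    Object.keys(receivedByClient).map((name) => name.toLowerCase())
  );
  return Object.keys(sentByOrigin)
    .map((name) => name.toLowerCase())
    .filter((name) => !received.has(name));
}

// The origin headers are the three from this post; the client-side
// headers are illustrative only.
const stripped = findStrippedHeaders(
  {
    'Transfer-Encoding': 'chunked',
    'Connection': 'keep-alive',
    'Content-Encoding': 'none',
  },
  { 'content-type': 'text/event-stream' }
);
console.log(stripped);
```

Seeing `transfer-encoding` and `connection` in that list is not necessarily a bug. Both are connection-specific headers that HTTP/2 does not allow, so a proxy that speaks HTTP/2 to the client is expected to drop them.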

Learning About Dynamic Responses

During this investigation, I learned more about "dynamic" responses and HTTP streaming in general. We explored whether an egress proxy was buffering the entire response to assign a content-length header, but that wasn't happening. It was valuable to develop better intuition around what HTTP streaming actually means.
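A rough way to tell these cases apart from the client side is to look at how the response is framed. The sketch below assumes HTTP/1.1 semantics (HTTP/2 has no Transfer-Encoding header, so a missing Content-Length is the only hint there). `describeFraming` is a hypothetical helper, and its labels are our own, not standard terms.

```javascript
// Hypothetical helper: classify a response by how its body length is signalled.
// 'fixed-length'   -> Content-Length present, so the size was known before sending
// 'chunked'        -> HTTP/1.1 chunked transfer coding, size unknown up front
// 'unknown-length' -> neither header present (typical for HTTP/2 streams)
function describeFraming(headers) {
  const normalized = {};
  for (const [name, value] of Object.entries(headers)) {
    normalized[name.toLowerCase()] = String(value);
  }
  if ('content-length' in normalized) {
    return 'fixed-length';
  }
  const codings = (normalized['transfer-encoding'] || '')
    .toLowerCase()
    .split(',')
    .map((coding) => coding.trim());
  if (codings.includes('chunked')) {
    return 'chunked';
  }
  return 'unknown-length';
}

console.log(describeFraming({ 'Content-Length': '2048' }));
console.log(describeFraming({ 'Transfer-Encoding': 'chunked' }));
console.log(describeFraming({ 'Content-Type': 'text/event-stream' }));
```

A `fixed-length` result on an endpoint that is supposed to stream is a hint that something upstream collected the whole body first. It does not prove it, since an origin can also know the length in advance.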

The Breakthrough

After about 3 hours of making no progress, only Lucas made any headway. His previous Cloudflare experience gave him the intuition that a worker might fix the issue, which led to our temporary solution.

We implemented the patch fix and went to lunch, but had to deal with another issue: routing requests through the Cloudflare worker changed the IP they came from, which triggered our IP-based rate limiter. After fixing that, we called it a day around 8pm.

Later that night, Lucas had an epiphany. Thinking about why cURL worked but fetch didn't, he consulted Claude about the differences between these tools. Claude walked him through a crucial insight: when Lucas examined the request headers, he noticed that cURL wasn't sending an Accept-Encoding header by default, while browsers always do. This missing header was the red flag that led Claude to explain that "cURL doesn't allow compressed responses without the --compressed flag."

As Claude pointed out, this behavior difference is key:

- Browser requests include Accept-Encoding: gzip, deflate, br by default

- cURL requests omit Accept-Encoding unless you use the --compressed flag

- Without the Accept-Encoding header, servers and proxies generally won't compress the response

Bingo. The problem was compression.
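The negotiation described in the list above can be sketched as a small function. This is a simplified model of typical behavior, not how Cloudflare or any particular server implements it. `pickEncoding` and its `supported` list are hypothetical, and q-values are only handled to the extent of skipping encodings marked `q=0`.

```javascript
// Hypothetical model of content-encoding negotiation.
// Returns the encoding a server or proxy would apply, or 'identity'
// (no compression) when the client did not ask for anything it supports.
function pickEncoding(acceptEncoding, supported = ['br', 'gzip']) {
  if (!acceptEncoding) {
    return 'identity';
  }
  const accepted = acceptEncoding
    .split(',')
    .map((part) => {
      const [name, ...params] = part.trim().toLowerCase().split(';');
      const q = params.map((p) => p.trim()).find((p) => p.startsWith('q='));
      return { name: name.trim(), q: q ? parseFloat(q.slice(2)) : 1 };
    })
    .filter((entry) => entry.name && entry.q > 0)
    .map((entry) => entry.name);
  return supported.find((encoding) => accepted.includes(encoding)) || 'identity';
}

console.log(pickEncoding(undefined));           // cURL without --compressed: identity
console.log(pickEncoding('gzip, deflate, br')); // typical browser: br
```

With no Accept-Encoding header the response stays uncompressed, which matches why cURL kept streaming while browser fetch and node-fetch did not.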

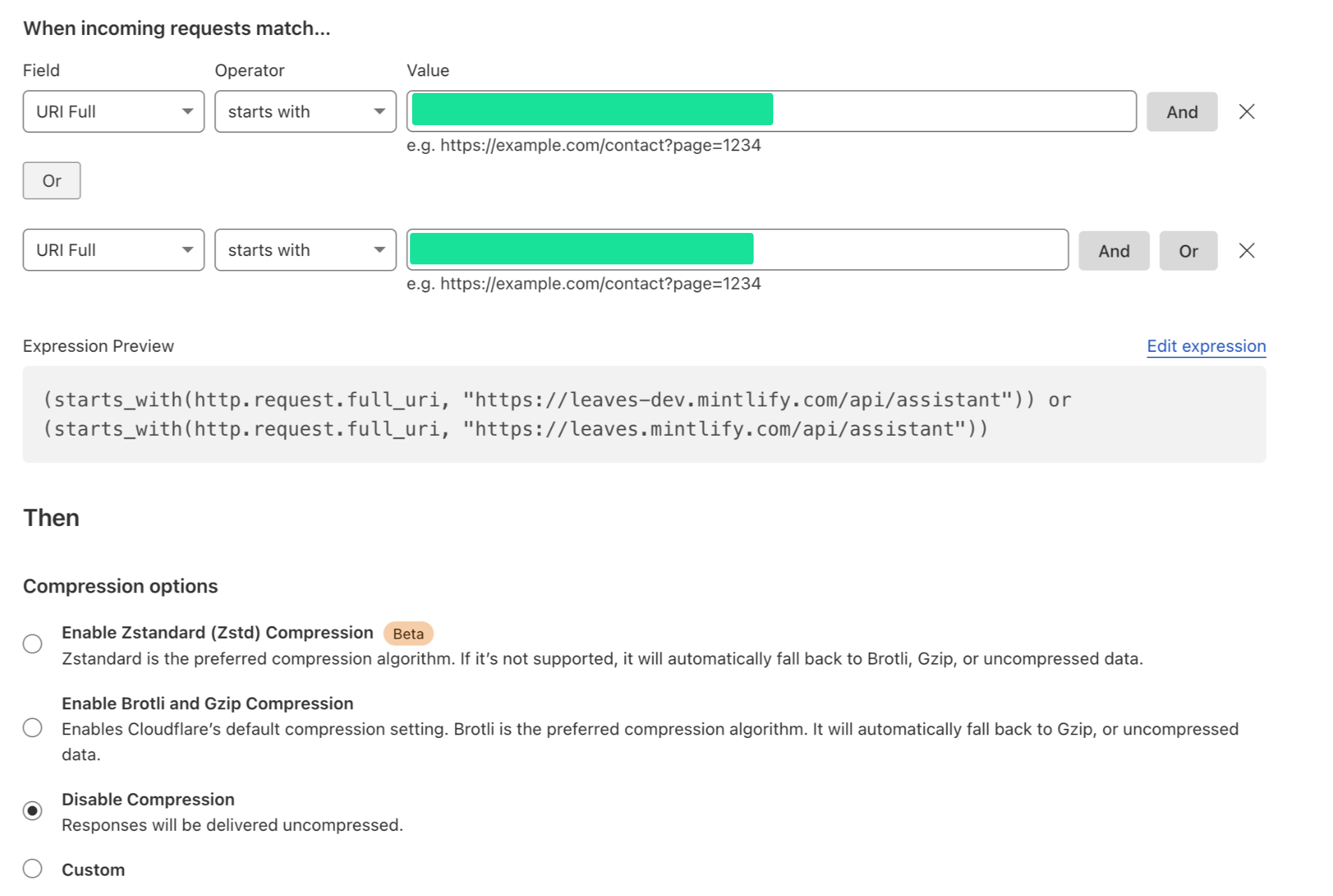

The Solution

Lucas went into the Cloudflare dashboard, disabled compression, and everything worked perfectly. A simple solution to what had been a very annoying problem causing 10+ second latency on requests.
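The dashboard toggle is what fixed it for us. A per-response alternative, sketched here as an assumption rather than something taken from our fix, is to mark streaming responses with `Cache-Control: no-transform`, a standard directive that asks intermediaries not to modify the payload. Our understanding is that Cloudflare honors it for compression, but verify that against Cloudflare's current documentation before relying on it. `markNoTransform` is a hypothetical helper.

```javascript
// Hypothetical helper: return a copy of the headers with `no-transform`
// added to Cache-Control, preserving any directives already present.
function markNoTransform(headers) {
  const out = new Headers(headers);
  const existing = out.get('cache-control');
  const directives = existing
    ? existing.split(',').map((d) => d.trim()).filter(Boolean)
    : [];
  if (!directives.some((d) => d.toLowerCase() === 'no-transform')) {
    directives.push('no-transform');
  }
  out.set('cache-control', directives.join(', '));
  return out;
}

const streamingHeaders = markNoTransform({
  'Content-Type': 'text/event-stream',
  'Cache-Control': 'no-cache',
});
console.log(streamingHeaders.get('cache-control')); // no-cache, no-transform
```

Because it accepts anything the `Headers` constructor accepts, the same helper could wrap `responseHeaders` in a Worker like the one above or the headers of an origin response.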

The Embarrassing Realization: A Familiar Problem in Disguise

This was embarrassingly familiar. Denzell and I had actually dealt with this exact issue before at Trieve, fixing it in PR #2002: remove compression for chat routes. The reason we didn't immediately recognize it was what I call "magic obfuscation" - when you've fixed something before but the context makes it unrecognizable.

In fact, Mayank, another team member at Mintlify who worked with Trieve from the customer side, had discovered a similar streaming issue with this message:

hey folks! were there any changes to the message apis yesterday? streaming doesn't seem to be working as intended. maybe github.com/devflowinc/trieve/pull/1999/files?

The key difference was visibility. Since Trieve is source available, Mayank could look at recent commits and quickly identify the issue. In our current Mintlify case, we had no such visibility into what changed, which made the debugging process far more difficult.

When Cloudflare's Magic Becomes a Nightmare

The strangest part is that streaming worked correctly one day and stopped working the next, without any changes on our end. ALB configuration stayed the same, Cloudflare wasn't touched, and none of our code changed. Somehow, Cloudflare had silently enabled compression, and we have no idea why.

This highlights a fundamental issue with Cloudflare's approach to infrastructure management. While their "intelligent" defaults and automatic optimizations can be helpful for simple use cases, they create serious problems for production systems that require predictable behavior. The fact that a critical setting like compression can change without explicit configuration updates makes debugging nearly impossible.

In a properly designed infrastructure-as-code environment, this issue would have been immediately obvious. A git diff would show exactly when compression was enabled, by whom, and why. Instead, we spent hours chasing ghosts because Cloudflare's dashboard-driven configuration model obscures the audit trail of changes.

This isn't just about compression. It's about the broader pattern of "helpful" automation that removes visibility and control from engineering teams. When debugging production issues, the last thing you want is uncertainty about whether your infrastructure configuration matches what you deployed.

Lessons Learned

- Compression at a proxy can break HTTP streaming - The response may be held back instead of being forwarded chunk by chunk. This is now permanently etched in my brain

- cURL vs fetch behavior differences - cURL doesn't request compressed responses unless you pass the --compressed flag, while browsers send Accept-Encoding by default

- Cloudflare workers as debugging tools - Sometimes adding middleware can help isolate problems

- Source availability matters - Having access to change logs makes debugging much easier

- Team knowledge sharing - Lucas's Cloudflare experience was crucial to finding both the workaround and the real solution

If I'm ever on a team using Cloudflare again, we'll make sure compression is disabled for any HTTP streaming endpoints from day one.
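A cheap guard against this regressing is to measure how long the first chunk takes compared with the whole response. The sketch below is one way to do that, assuming a runtime with web streams (a modern browser or a recent Node version). `measureStream` is a hypothetical helper, and the URL in the usage comment is a placeholder.

```javascript
// Hypothetical helper: read a web ReadableStream to the end and report
// when the first chunk arrived, how long the whole body took, and how
// many chunks were delivered.
async function measureStream(body, now = () => performance.now()) {
  const start = now();
  const reader = body.getReader();
  let firstChunkMs = null;
  let chunks = 0;
  while (true) {
    const { done } = await reader.read();
    if (done) {
      break;
    }
    if (firstChunkMs === null) {
      firstChunkMs = now() - start;
    }
    chunks += 1;
  }
  return { firstChunkMs, totalMs: now() - start, chunks };
}

// Usage (placeholder URL, not a real endpoint):
//   const response = await fetch('https://example.com/api/stream');
//   console.log(await measureStream(response.body));
```

If `firstChunkMs` is close to `totalMs` on an endpoint that should stream, the body is probably being held somewhere between the origin and the client.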
