Emma Adler

Contributor @ Hackmamba


AI is fundamentally transforming how documentation is written, structured, and consumed in 2025, with LLMs becoming the primary way developers discover and interact with product information. Documentation teams must now optimize for AI readers through structured content, llms.txt files, and Model Context Protocol integration to ensure their content surfaces accurately in AI interfaces.

Coming into 2025, there were many predictions about how AI would change what we do and how we do it. Just halfway through the year, the changes those predictions described are already reshaping our daily tools and practices, faster than most people expected and across nearly every domain.

It's already clear that by the end of 2025, documentation that isn't structured for AI readers will struggle to surface in search and across every major interface developers use.

In this article, we will cover how AI is actively transforming the way documentation is written, structured, and used, and what this means for the future of content creation and developer experience.

Docs are now distribution channels

In 2025, AI responses are often the first place developers discover your product.

Guillermo Rauch tweet on ChatGPT

Large Language Models (LLMs) and the AI tools built on them, such as Claude, ChatGPT, and Perplexity, are now crawling your docs. They are also quoting them, summarizing them, and embedding them in chat interfaces, developer tools, and customer support flows.

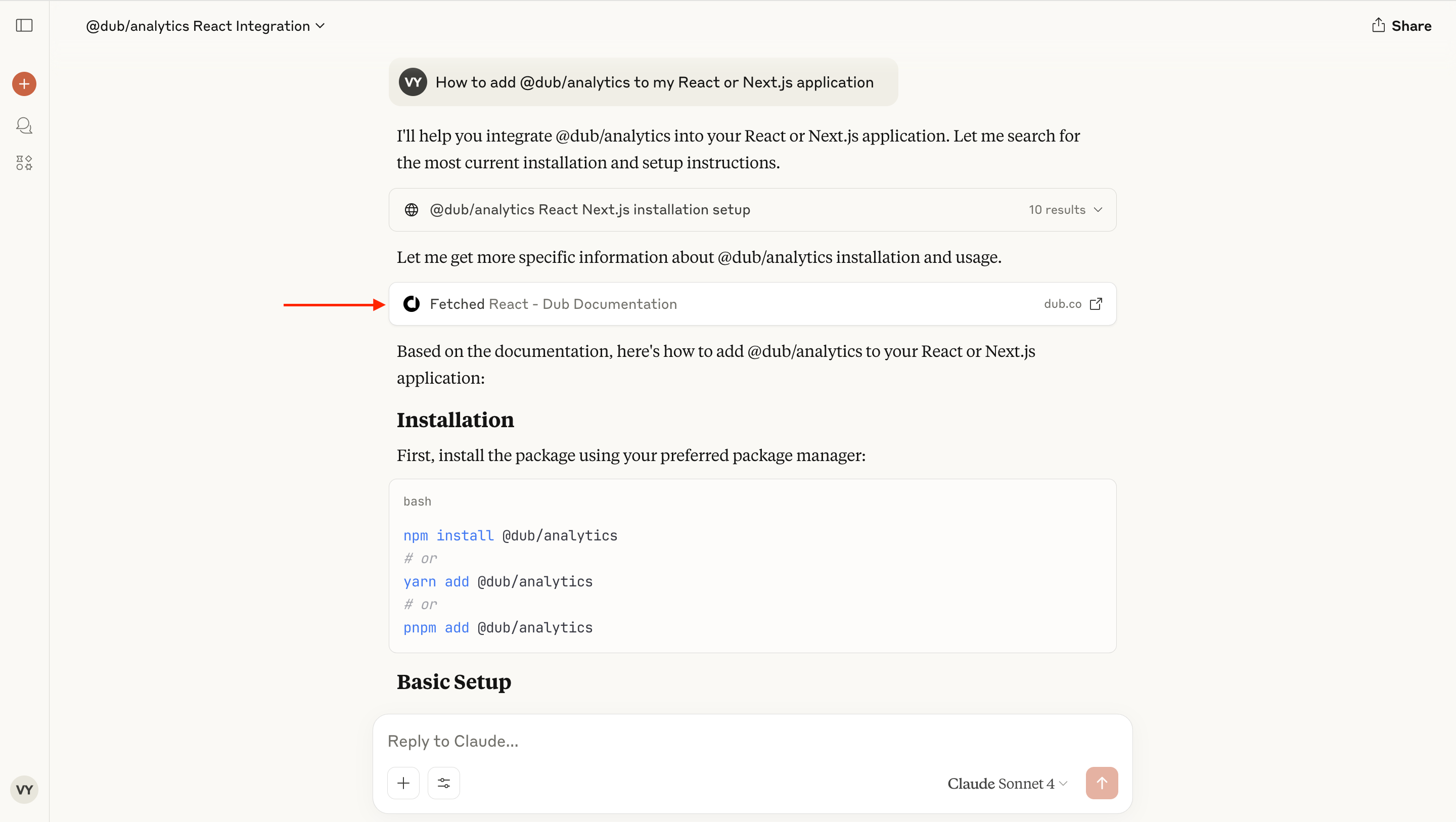

For example, when a developer asks Claude, "How to add @dub/analytics to my React or Next.js application?", the model pulls directly from Dub's documentation to generate step-by-step instructions.

Claude response for user query

AI systems rely on your documentation to provide accurate code suggestions and explanations, which means users can get immediate, contextual help without visiting your docs at all. If your documentation is optimized correctly, it can become your strongest distribution channel.

On the other hand, if your docs are unclear, outdated, or poorly structured, the AI's output is likely to be incorrect.

For documentation teams, this shift introduces LLMs as a new reader. That means your content not only supports users; it now fuels search, shapes product discovery, and powers AI interfaces.

This has profound implications for both the accuracy of your docs and how they are written.

Structure is the new SEO

If you want LLMs to interpret the content in your docs correctly, design your docs for how machines read.

“Beyond traditional SEO requirements, your documentation needs to be optimized for AI applications to better understand, navigate, and present your product to users,” says Han Wang, co-founder of Mintlify.

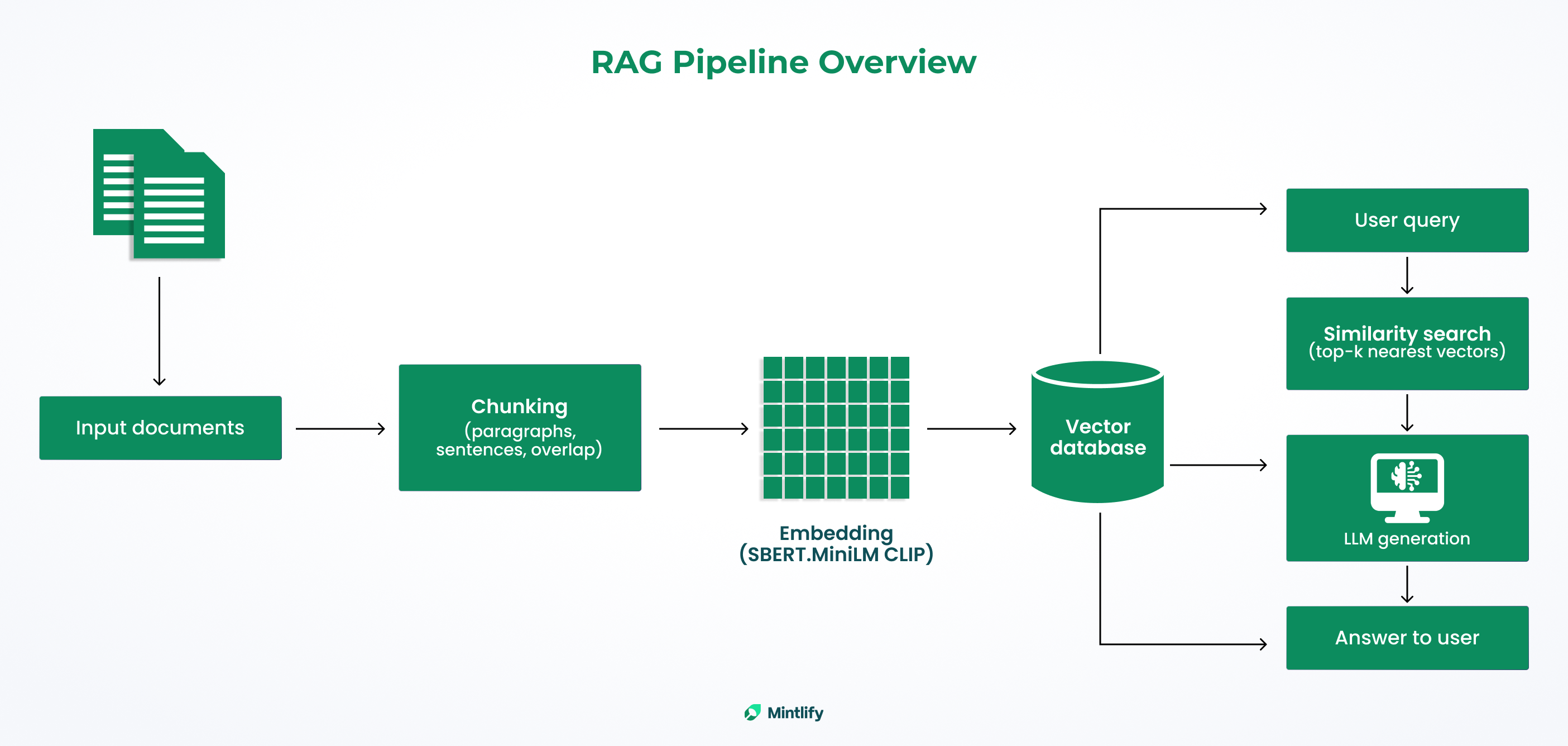

In 2025, passage-level indexing is the norm. The retrieval systems behind LLM-powered tools break your docs into chunks as small as a few lines, then return the chunks whose vector embeddings are most similar to the user's query. For instance, when a user asks Claude or ChatGPT a question that calls for a search, the system scans these chunks and hands the most semantically relevant ones to the model to compose its response.

How AI models read documents
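To make the retrieval step concrete, here is a minimal sketch of chunking and similarity search. Real systems use learned embedding models and vector databases; this version swaps in a simple bag-of-words vector so it runs with Python's standard library alone. The function names and the three-line chunk size are illustrative assumptions, not how any particular AI product works.

```python
import math
import re
from collections import Counter


def chunk(doc: str, max_lines: int = 3) -> list[str]:
    """Split a doc on blank lines, then cap each chunk at max_lines lines."""
    chunks = []
    for para in re.split(r"\n\s*\n", doc.strip()):
        lines = para.splitlines()
        for i in range(0, len(lines), max_lines):
            chunks.append("\n".join(lines[i:i + max_lines]))
    return chunks


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


DOC = """## API Rate Limits
Requests are limited to 100 per minute per API key.

## Authentication
Send your access token in the Authorization header."""

print(retrieve("How do I send an access token?", chunk(DOC)))
```

Because each chunk is scored in isolation, a chunk that restates its key terms (here, "access token") is far easier to retrieve than one that relies on context from elsewhere on the page.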

That means the structure of your docs directly affects AI performance: what gets retrieved and the quality of the response the model produces. Meaningful subheadings, code blocks, tables, and summaries help LLMs identify and retrieve content more reliably.
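Here's one way structure pays off at chunking time: a minimal sketch of a heading-aware splitter that attaches each section's heading path (for example, "Authentication > API keys") to its text, so a retrieved chunk still says what it is about. It assumes your docs are Markdown with `#`-style headings; real pipelines vary in how they split content.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.+)$")
FENCE = "`" * 3  # Markdown code-fence marker, built this way so the example nests cleanly


def chunk_by_heading(markdown: str) -> list[dict]:
    """Split Markdown into sections, tagging each with its heading path."""
    path: list[tuple[int, str]] = []  # stack of (heading level, heading text)
    body: list[str] = []
    chunks: list[dict] = []

    def flush() -> None:
        text = "\n".join(body).strip()
        if text:
            chunks.append({"path": " > ".join(title for _, title in path), "text": text})
        body.clear()

    in_fence = False
    for line in markdown.splitlines():
        if line.startswith(FENCE):
            in_fence = not in_fence  # "#" lines inside code blocks are not headings
        match = None if in_fence else HEADING.match(line)
        if match:
            flush()
            level = len(match.group(1))
            # Drop headings at the same or a deeper level, then push this one.
            while path and path[-1][0] >= level:
                path.pop()
            path.append((level, match.group(2).strip()))
        else:
            body.append(line)
    flush()
    return chunks


SAMPLE = "\n".join([
    "# Authentication",
    "Every request needs credentials.",
    "",
    "## API keys",
    "Create an API key in the dashboard.",
    FENCE + "bash",
    "# this comment is not a heading",
    'curl -H "Authorization: Bearer $API_KEY" https://api.example.com',
    FENCE,
    "",
    "## Rate limits",
    "Requests are limited to 100 per minute.",
])

for c in chunk_by_heading(SAMPLE):
    print(c["path"], "->", c["text"].splitlines()[0])
```

A chunk labeled "Authentication > Rate limits" carries its context with it, which is exactly what a vague "Overview" heading fails to do.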

Knowing how to improve LLM readability is no longer optional.

llms.txt is the new sitemap

By the end of 2025, any doc site without llms.txt will struggle to surface in AI interfaces.

The llms.txt file is a proposed standard, first put forward in September 2024 by Jeremy Howard, co-founder of Answer.AI, and it has quickly become a de facto convention for documentation sites. It tells AI readers what to read and how to prioritize, providing a structured outline of your docs complete with summaries, links, and semantic hints.

This file is already being crawled by ChatGPT, Claude, and other AI models as a signal of where to begin reading. Here is a sample of how the file is structured:

# AI Document Processing Platform

> Learn how to process documents, extract insights, and automate business workflows using AI-powered tools.

## How-to guides

- [Upload PDFs](https://example.com/docs/pdf-upload): Process multiple documents including PDFs

- [Analyzing User Behavior](https://example.com/docs/user-behavior): Understand patterns and optimize based on usage data

## Use Cases

- [Content Creation with AI](https://example.com/docs/content-generation): Automate blog posts

- [Data Extraction Workflows](https://example.com/docs/data-extraction): Extract structured data from unstructured files

- [Managing Business Processes](https://example.com/docs/business-process): Use AI to manage time-consuming business tasks

There's also llms-full.txt, a single file containing the full Markdown content of your docs, which customer-facing AI agents now use to answer support queries with higher accuracy.

Teams like Anthropic, Windsurf, and Dub already use llms.txt to shape how their docs appear inside AI interfaces. Most modern documentation platforms auto-generate an llms.txt file, but it's also easy to create manually.
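If you'd rather write the files yourself, a short script is enough. The sketch below builds both llms.txt and llms-full.txt from a list of pages, following the layout shown above: an H1 title, a blockquote summary, and H2 sections of annotated links. The page fields (`section`, `title`, `url`, `description`, `markdown`), the example content, and the output directory are hypothetical, so adapt them to however your docs are stored.

```python
from pathlib import Path


def build_llms_files(site_title: str, summary: str, pages: list[dict]) -> tuple[str, str]:
    """Return (llms.txt, llms-full.txt) contents for the given pages."""
    sections: dict[str, list[dict]] = {}
    for page in pages:
        sections.setdefault(page["section"], []).append(page)  # keeps first-seen section order

    index = [f"# {site_title}", "", f"> {summary}", ""]
    for name, items in sections.items():
        index += [f"## {name}", ""]
        index += [f"- [{p['title']}]({p['url']}): {p['description']}" for p in items]
        index.append("")

    # llms-full.txt: every page's Markdown in one file, each tagged with its source URL.
    full = "\n\n".join(
        f"# {p['title']}\n\nSource: {p['url']}\n\n{p['markdown'].strip()}" for p in pages
    )
    return "\n".join(index), full + "\n"


def write_llms_files(out_dir: str, index: str, full: str) -> None:
    """Write both files into out_dir, creating it if needed."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    (out / "llms.txt").write_text(index, encoding="utf-8")
    (out / "llms-full.txt").write_text(full, encoding="utf-8")


PAGES = [
    {"section": "How-to guides", "title": "Upload PDFs",
     "url": "https://example.com/docs/pdf-upload",
     "description": "Process multiple documents including PDFs",
     "markdown": "Upload one or more PDFs from the dashboard."},
    {"section": "Use cases", "title": "Data Extraction Workflows",
     "url": "https://example.com/docs/data-extraction",
     "description": "Extract structured data from unstructured files",
     "markdown": "Define a schema, then run extraction."},
]

index, full = build_llms_files(
    "AI Document Processing Platform",
    "Learn how to process documents and automate business workflows using AI-powered tools.",
    PAGES,
)
print(index)
# write_llms_files("public", index, full)  # hypothetical: serve both from your docs site root
```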

For more details on how llms.txt works and why it's important, see What Is llms.txt? If you're trying to decide whether it's worth implementing, this breakdown of the value of llms.txt can help.

While llms.txt tells LLMs how to read your docs, there is a new protocol that helps them query your docs directly.

Context streaming is already here

In 2025, docs without Model Context Protocol (MCP) integration struggle to provide contextual product guidance to their users.

Model Context Protocol is an open standard introduced by Anthropic that allows AI systems to retrieve structured, real-time context from external sources such as documentation, APIs, databases, and config files.

Dhanji R. Prasanna, CTO of Block, one of MCP's early adopters, put it this way: “Open technologies like the Model Context Protocol are the bridges that connect AI to real-world applications.”

Instead of relying on static embeddings or outdated snapshots, LLMs can now request up-to-date, task-specific context based on the user's intent.

MCP is already gaining traction. OpenAI supports MCP across both ChatGPT and its Agents SDK. Community platforms like OpenRouter are also experimenting with MCP-compatible sources, expanding the ecosystem around this new infrastructure layer.

Docs teams are also starting to expose OpenAPI definitions, Markdown pages, and structured config files as machine-readable context streams using MCP. Mintlify supports MCP, giving you the ability to create these servers directly from your existing documentation setup.
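Under the hood, MCP messages are JSON-RPC 2.0 requests and responses. To show the shape of a docs lookup, here is a deliberately simplified sketch that answers `tools/call` and `resources/read` requests from an in-memory dictionary. It is not a compliant MCP server: it skips the `initialize` handshake, capability negotiation, `tools/list` and `resources/list`, and the stdio or HTTP transport. The `search_docs` tool, the `docs://` URIs, and the page contents are hypothetical; for a real server, use an official MCP SDK or your docs platform's built-in support.

```python
import json

# Hypothetical in-memory docs; a real server would load your Markdown or OpenAPI files.
DOCS = {
    "docs://authentication": "# Authentication\nSend your access token in the Authorization header.",
    "docs://rate-limits": "# API Rate Limits\nRequests are limited to 100 per minute per API key.",
}


def search_docs(query: str) -> str:
    """Hypothetical tool: return URIs of pages containing every query word."""
    words = query.lower().split()
    hits = [uri for uri, text in DOCS.items() if all(w in text.lower() for w in words)]
    return "\n".join(hits) or "No matching pages."


def reply(req_id, *, result=None, error=None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Answer one JSON-RPC request using MCP's tools/call and resources/read shapes."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})

    if method == "tools/call":
        if params.get("name") != "search_docs":
            return reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        text = search_docs(params.get("arguments", {}).get("query", ""))
        return reply(req_id, result={"content": [{"type": "text", "text": text}], "isError": False})

    if method == "resources/read":
        uri = params.get("uri")
        if uri not in DOCS:
            return reply(req_id, error={"code": -32602, "message": f"Unknown resource: {uri}"})
        return reply(req_id, result={"contents": [
            {"uri": uri, "mimeType": "text/markdown", "text": DOCS[uri]}
        ]})

    return reply(req_id, error={"code": -32601, "message": f"Method not found: {method}"})


request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "search_docs", "arguments": {"query": "rate limits"}}}
print(handle(json.dumps(request)))
```

The point of the design is that the model asks for exactly the page or search result it needs at answer time, instead of relying on whatever snapshot of your docs it saw during training.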

How to design for the AI reader

Making your docs AI-readable is already essential for discoverability, support automation, and in-product assistance. If you want LLMs to surface accurate answers from your documentation, structure and clarity are the first places to start.

Here's a quick checklist to help you get started:

Structure for AI and people

- Use clear, descriptive headings. Replace vague titles like “Overview” with specific ones like “Authentication Flow” or “API Rate Limits.”

- Keep paragraphs short and focused. Aim for 3–5 lines with one idea per paragraph to help AI chunk your content cleanly.

- Add semantic formatting. Use code blocks, tables, and bullet points where appropriate.

- Stick to canonical phrasing. Reuse exact terms like “access token,” “API key,” or “retry logic” consistently. This strengthens semantic matching for AI systems.

- Keep content fresh. Update your docs whenever the product changes so AI systems provide current information about your product.
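Several of these checks are easy to automate. Here is a minimal lint sketch, assuming Markdown source files; the list of vague headings, the five-line paragraph limit (taken from the upper end of the 3–5 line guideline), and the canonical-term table are hypothetical placeholders for your own style guide.

```python
import re

HEADING = re.compile(r"^#{1,6}\s+(.+)$")
FENCE = "`" * 3  # Markdown code-fence marker
VAGUE_HEADINGS = {"overview", "introduction", "details", "general", "misc"}
MAX_PARAGRAPH_LINES = 5
# Hypothetical style guide: canonical term -> variants to flag.
CANONICAL_TERMS = {
    "access token": ["auth token", "bearer key"],
    "API key": ["api-key", "apikey"],
}


def lint(markdown: str) -> list[str]:
    """Flag vague headings, long paragraphs, and non-canonical terms."""
    issues = []
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match and match.group(1).strip().lower() in VAGUE_HEADINGS:
            issues.append(f"Vague heading: {match.group(1).strip()!r}")

    for block in re.split(r"\n\s*\n", markdown):
        if block.lstrip().startswith(FENCE):
            continue  # rough heuristic: don't count code blocks as prose
        lines = [ln for ln in block.splitlines() if ln.strip() and not HEADING.match(ln)]
        if len(lines) > MAX_PARAGRAPH_LINES:
            issues.append(
                f"Paragraph has {len(lines)} lines (max {MAX_PARAGRAPH_LINES}): {lines[0][:40]!r}"
            )

    for preferred, variants in CANONICAL_TERMS.items():
        for variant in variants:
            if re.search(rf"\b{re.escape(variant)}\b", markdown, re.IGNORECASE):
                issues.append(f"Use {preferred!r} instead of {variant!r}")
    return issues


SAMPLE = (
    "## Overview\nThis page explains how to pass your auth token.\n\n"
    "## Authentication Flow\nSend the token in the Authorization header.\n"
)
for issue in lint(SAMPLE):
    print(issue)
```

Running a check like this in CI keeps terminology and structure from drifting as more people contribute to the docs.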

Test, validate, and iterate

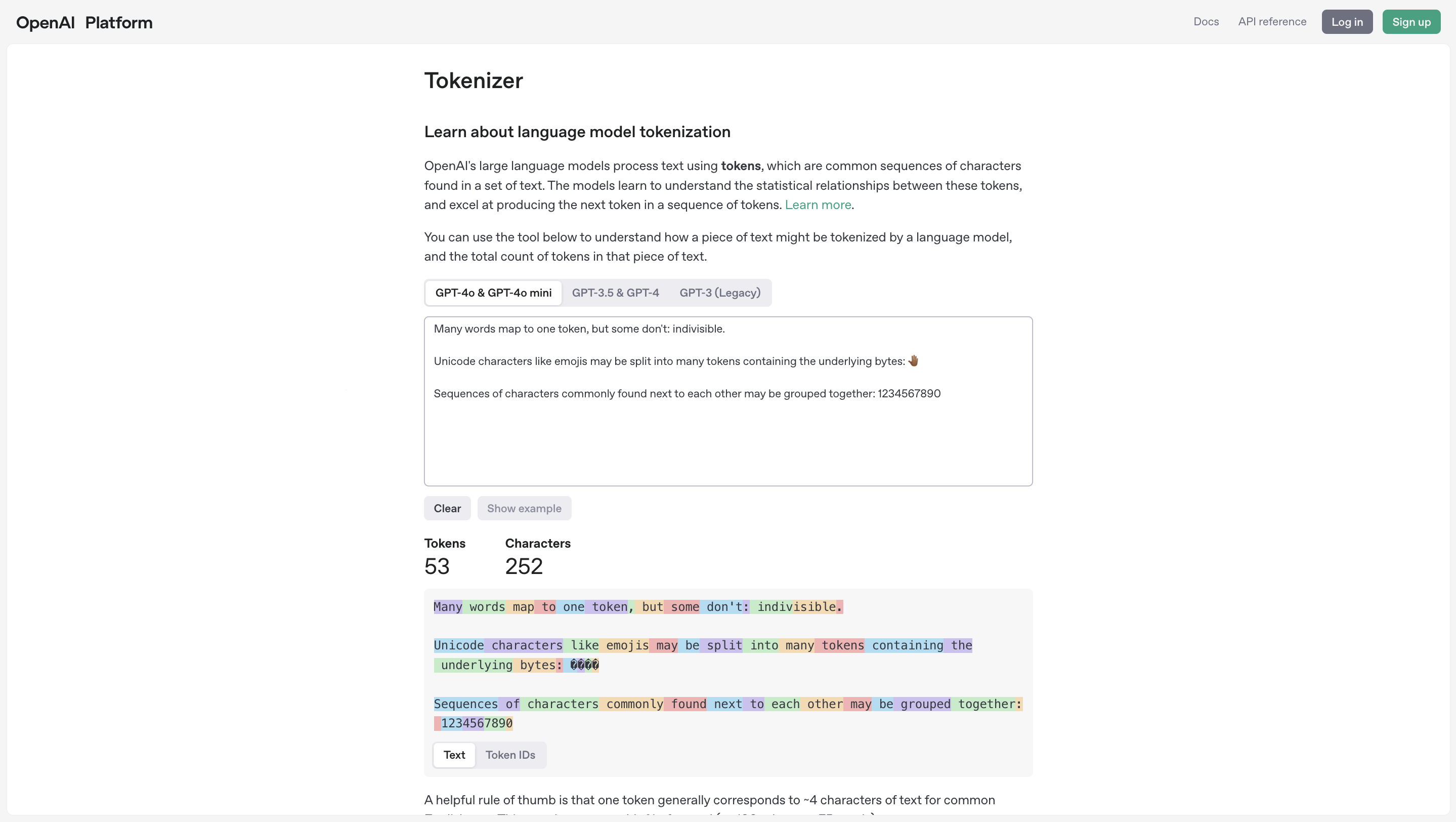

- Try your docs in the OpenAI Embedding Playground to see how chunking affects meaning and retrievability.

OpenAI Embedding Playground

- Generate and audit your /llms.txt file with tools like llmstxt.new to identify the most valuable entry points in your documents.

- Explore dynamic context by testing your docs with an MCP server.
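Beyond dedicated tools, you can script a basic llms.txt audit yourself. As a sketch, assuming you can export the list of published page URLs from your sitemap or docs build (here a hypothetical hard-coded set), this checks for the H1 title and summary line, links without descriptions, links to pages that no longer exist, and published pages that aren't listed. It is a custom check, not a reimplementation of any particular tool.

```python
import re

LINK = re.compile(r"^\s*-\s*\[([^\]]+)\]\(([^)\s]+)\)(?::\s*(.*?))?\s*$")


def audit_llms_txt(text: str, published_urls: set[str]) -> list[str]:
    """Report structural problems and link drift in an llms.txt file."""
    problems = []
    lines = text.splitlines()
    first = next((ln for ln in lines if ln.strip()), "")
    if not first.startswith("# "):
        problems.append("First line should be an H1 title, e.g. '# Project name'.")
    if not any(ln.startswith("> ") for ln in lines):
        problems.append("Missing '> ' summary line.")

    listed = set()
    for line in lines:
        match = LINK.match(line)
        if not match:
            continue
        title, url, notes = match.groups()
        listed.add(url)
        if url not in published_urls:
            problems.append(f"Stale link (not a published page): {url}")
        if not notes:
            problems.append(f"No description for: {title}")

    # Not every page belongs in llms.txt; treat these as prompts to review, not errors.
    for url in sorted(published_urls - listed):
        problems.append(f"Published page not listed: {url}")
    return problems


SAMPLE = "\n".join([
    "# AI Document Processing Platform",
    "",
    "> Learn how to process documents using AI-powered tools.",
    "",
    "## How-to guides",
    "",
    "- [Upload PDFs](https://example.com/docs/pdf-upload): Process multiple documents including PDFs",
    "- [Legacy importer](https://example.com/docs/legacy-import)",
])
PUBLISHED = {"https://example.com/docs/pdf-upload", "https://example.com/docs/data-extraction"}

for problem in audit_llms_txt(SAMPLE, PUBLISHED):
    print(problem)
```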

Mintlify supports all of these optimizations out of the box, from automatic llms.txt and llms-full.txt generation to MCP support and semantic formatting best practices.

If you're serious about making your documentation AI-native, the next step is either to test it in real LLM environments or reach out to Mintlify for expert guidance.

What comes next? AI tools and code documentation trends to watch out for

From everything we've explored so far, it's clear that we're moving beyond using AI for summaries or edits toward embedding it in every layer of the documentation lifecycle. Teams that already create documentation for both humans and machines are gaining a strategic advantage.

In the coming months, we expect to see:

- More adoption and tools for LLM-assisted documentation workflows. This includes generating changelogs from commit history, scaffolding usage guides from OpenAPI specifications, and drafting internal onboarding materials based on support conversations or code comments.

- More teams experimenting with AI-native authoring environments. Our article on AI-generated documentation covered how teams are already integrating these tools into production workflows and which guardrails still matter.

- Wider MCP adoption. MCP is still in its early stages, and we expect adoption to keep growing.

We'll keep monitoring how AI is changing documentation and share new insights through upcoming sessions and blog posts to help you stay ahead.

If you're interested in discussing emerging trends with our docs experts, we're always happy to chat.
